In our previous performance test, we looked at high throughput compression, suited for log files, map-reduce data, etc. This post will deal with webserver performance, where we will investigate which gzip is best suited for a Go webserver.

Update (18 Sept 2015): Level 1 compression has been updated to a faster version, so the difference to deflate level 1 has gotten smaller. The benchmark sheet has been updated, so some of these numbers may be inaccurate.

First of all, a good deal of you might not want to use gzip at your backend level at all. A common setup is to put a reverse proxy like nginx in front of your web service and let it handle compression and TLS. This has the advantage that you are handling compression in one place, and usually the reverse proxy has better performance than Go. The disadvantage is that it can place a heavy load on your reverse proxy, and it is usually a lot easier to scale the number of backend application servers than the number of reverse proxies.

For this test I have compiled a webserver test set. It is rather small and contains a number of HTML pages, CSS, minified JavaScript, SVG and JSON files. These file types are selected on purpose since they are good compression targets. I have not included precompressed formats like JPEG, PNG or MP3, since compressing these is just a waste of CPU cycles. I would expect you not to attempt to compress these types of content, which is why they are not included in the test set.

I have added the results to the same sheet as the previous results. To see them switch to the “Web content” tab.

In this benchmark the test set is run 50 times, which gives a total of 27,400 files and 240,800,700 bytes for each test, with an average file size of a little more than 8KB. Source code of the test program. It is important to note that the benchmark runs sequentially, so only one core is ever used. That means that, as a rough estimate, you can multiply the files per second by the number of physical cores in your server.

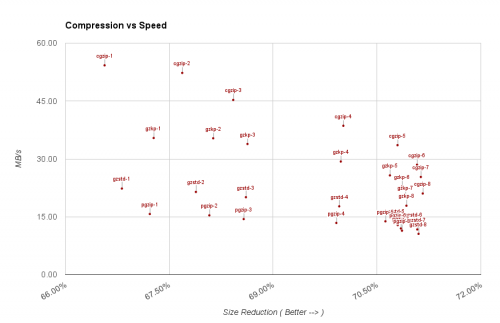

The standard library gzip has a peak single-core performance of 2664 files/second. Performance doesn’t degrade significantly until after level 4, but compression improves a little with each step. Based on this test set I would not recommend using any level higher than 5, since the gains beyond that are very small.

The pgzip package is confirmed to be bad at these file sizes. As the documentation also states, do not use pgzip for a webserver, only for big files.

My modified gzip package is consistently around 1.6 times faster than the standard library. At levels below 5 it has better compression. At higher levels its compression is about 0.5% worse than the standard library at the same level. If this is a concern, use a higher compression level, which gives both better compression and speed. Below level 3 there is little speedup, and above compression level 5 there is no big benefit, so levels 3, 4 and 5 seem to be the sweet spot.

Update: The new “Snappy” level 1 compression offers the best speed available (2.2x the standard library), at a slight loss in compression. This seems like a very good option for heavily loaded servers.

The cgo cgzip package performs really well here. Except at level 1, it has around 30-50% additional throughput compared to the modified library, with the best compression at levels 4+. At level 1 it is clearly the fastest, with only a 0.5% deficit in compression size. It seems like levels 2 to 5 are the best options. So cgzip remains the best option for webservers if cgo/unsafe is an option for you, but the gap to a clean Go solution has narrowed.

Re-using Gzip Writers

An important performance consideration is that creating a new Writer for your compressed content carries considerable overhead. When dealing with small data sizes like these, it can eat significantly into your performance.

Let’s look at the relevant code for a very simple http wrapper, taken from here.

    // Wrap a http.Handler to support transparent gzip encoding.
    func NewHandler(h http.Handler) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            [...]
            gz := gzip.NewWriter(w)
            defer gz.Close()
            h.ServeHTTP(&gzipResponseWriter{Writer: gz, ResponseWriter: w}, r)
        })
    }

The important aspect here is that a Writer is created on EVERY request. In some simple tests this reduces throughput by approximately 35%. A very simple way to avoid this is to re-use Writers. This can however be tricky, since each web request runs on a separate goroutine, and we want multiple requests to run at the same time.

This is however a very good use of a sync.Pool, so you can re-use Writers between goroutines without any pain. Here is how the modified handler looks:

    // Create a Pool that contains previously used Writers and
    // can create new ones if we run out.
    var zippers = sync.Pool{New: func() interface{} {
        return gzip.NewWriter(nil)
    }}

    // Wrap a http.Handler to support transparent gzip encoding.
    func NewHandler(h http.Handler) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            [...]
            // Get a Writer from the Pool
            gz := zippers.Get().(*gzip.Writer)
            // When done, put the Writer back in to the Pool
            defer zippers.Put(gz)
            // We use Reset to set the writer we want to use.
            gz.Reset(w)
            defer gz.Close()
            h.ServeHTTP(&gzipResponseWriter{Writer: gz, ResponseWriter: w}, r)
        })
    }

This is a simple change and in web server workloads it will give you much better performance.

Edit: For a more complete package, take a look at the httpgzip package by Michael Cross.

Which types should I compress?

As we have covered earlier, simply gzipping all content is a waste of CPU cycles on both the server and the client, so spotting the correct content to gzip is important. The easiest way to spot compressible content is to look at the MIME types of your content.

Here is a small whitelist that is safe to start with. You can add more, if you have specific types you use on your server.

application/javascript

application/json

application/x-javascript

application/x-tar

image/svg+xml

text/css

text/html

text/plain

text/xml
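One way to apply this whitelist in Go is a small lookup keyed on the media type, ignoring parameters such as `charset`. This is a sketch: the `compressible` map and the `shouldCompress` function are names invented for illustration, and only `mime.ParseMediaType` comes from the standard library.

```go
package main

import (
	"fmt"
	"mime"
)

// compressible mirrors the whitelist above.
var compressible = map[string]bool{
	"application/javascript":   true,
	"application/json":         true,
	"application/x-javascript": true,
	"application/x-tar":        true,
	"image/svg+xml":            true,
	"text/css":                 true,
	"text/html":                true,
	"text/plain":               true,
	"text/xml":                 true,
}

// shouldCompress reports whether a Content-Type header value is on the
// whitelist. Unparseable or empty values are treated as not compressible.
func shouldCompress(contentType string) bool {
	mediaType, _, err := mime.ParseMediaType(contentType)
	if err != nil {
		return false
	}
	// ParseMediaType lowercases the media type, so the lookup is
	// effectively case-insensitive.
	return compressible[mediaType]
}

func main() {
	for _, ct := range []string{"text/html; charset=utf-8", "image/png", "application/json"} {
		fmt.Printf("%-26s compress=%v\n", ct, shouldCompress(ct))
	}
}
```

A wrapping handler would call this with the response's `Content-Type` before deciding whether to hand out a gzip Writer.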

Precompress your input

While I risk stating the obvious, the best way to avoid compression cost on your server is to do it in advance on static content.

Static content can be pre-compressed, and usually when creating a static file server, I add functionality where you can place a .gz file alongside the uncompressed input, which can then be served directly from disk.

- Client requests “/static/file.js”

- Server checks if client accepts gzip encoding

- If so, see if there is a “/static/file.js.gz”

- If so, set Content-Encoding and serve the gzipped file directly from disk

All stages should of course have appropriate fallbacks. For pre-compressing your static content I can recommend the 7-zip compressor, which produces slightly smaller files with maximum compression settings.

In the final part I will show a near constant-time compressor that heavily trades compression for more predictable speed.