Last week Backblaze published a blog post on their usage of Reed-Solomon Erasure coding to maintain data available even if some of their storage servers goes offline. They also released a java library with the code they use for creating the reed-solomon sets.

I have always been fascinated by Reed-Solomon Erasure coding, but so far I have never understood completely what goes on under the hood. So I saw this as a good opportunity to learn about it, and I decided to create a Go port of the library.

You can find the package with documentation on the GitHub project page.

What is it good for?

Reed-Solomon Erasure Coding allow you to split your data into smaller segments and create “parity data”, that allow you to reconstruct your data, if some of the original data goes missing. In reality it is much more complicated than that. I would recommend that you look at the good introduction on the Backblaze blog or if you need a more theoretical introduction the Wikipedia article (of which I only understand something like 10%).

Programming the Package

Thankfully the original java library was very nicely written, so porting it was pretty easy, and after porting the tests written for the library it was running pretty well. Performance was nowhere near the Java version, but after a few modification it was decent.

I planned on doing assembler work to speed it up. At least that would help me avoid the constant bounds checks that was a major part of the performance deficit. But in my research to see if there was some assembler already written, I found a presentation called Screaming Fast Galois Field Arithmetic. Without getting too technical, it describes a method to do the “slow” part of the encoding using SSE3 assembler.

With some of the optimizations described, I was able to get the “pure Go” implementation on par with the Java version in terms of speed. But using assembler, the performance jumped through the roof. When using the assembler version the performance is now usually around an order of magnitude faster than what is possible without assembler.

Long story short, it is capable of encoding more than 1GB per second per CPU core. If you are interested in raw numbers they you can see the performance numbers on the project page.

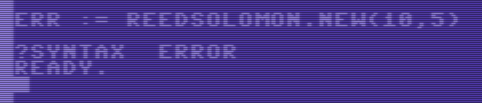

Designing the Interface

Most packages for Erasure Coding are pretty technical, and to people like me without a strong math background they can seem a bit scary. So my main intention was to

- Create a simple interface without too many technical details.

- Try not to make too many assumptions on usage.

- Good documentation

I am only sure I achieved part 3), although I cannot see how I could make 1) and 2) better. So if you have any feedback, feel free to add it below.

The package

You can find the package with documentation on the GitHub project page.

If you find any issues, or have suggestions for improvements feel free to write here or on GitHub issue.

I hope you will find it useful!

/Klaus