Update 2015:

This is a re-post of a 2010 article I wrote for the Rawstudio blog, which at the time of writing is off-line.

- L1 cache sizes have not changed at all in 5 years.

- SSE 4.1 is more commonplace for flooring, but SSE2 is still needed for fallback.

- Intel has gather/scatter instructions on their newest CPUs, which helps lookups.

- It is no longer faster to transfer XMM->reg through memory.

So overall, only a few things have changed in five years.

Interpolated look-up tables using SSE2

Something that you are very likely to encounter, when writing SSE2 assembler is the issue of using tables for looking up data. While this is a very simple operation in C, it presents a challenge with a lot of pitfalls, when using SSE2.

When to use lookup tables?

In general, avoid them, if it is within the possibility. Let’s have a look at a simple example:

const float ushort_to_float[65535];

float convert_unsigned_short_to_float(unsigned short value) {

return ushort_to_float[value];

}This is quite tempting in C – especially if you can do some transformations on the value, by modifying the lookup table, if you need something expensive like the square root, use the inverted value. But lets look at the drawbacks.

The table is 256KB, so you will run out of level 1 cache on most CPUs. In C the penalty for a value in L1 cache this is usually a couple of cycles per value, but if it has to be fetched from L2, we are talking about 15 cycles or more on most platforms. But if it saves you a division and a square root or something similar the trade-off may be ok for you.

In SSE2, the trade-off picture is changed. Here the penalty per pixel for doing the lookup remains the same (or even rises), while calculating the value is usually only about 25% of cost of what the C-code. So you can see – we can do a lot more calculations in SSE2 before using a table should even be considered.

What if I have to use lookup tables?

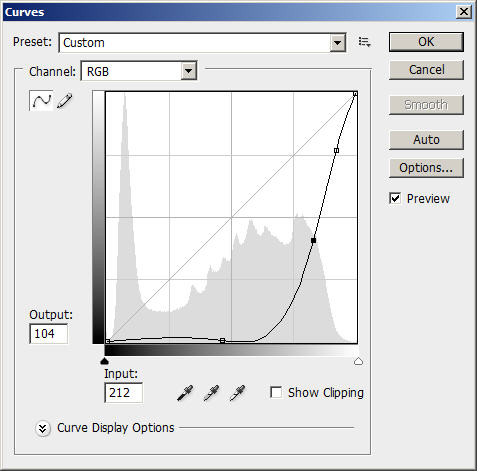

There might be cases, where using tables are the only way, because calculating the values simply aren’t practically possible in SSE2. A practical example of this from Rawstudio is the curve correction, that involves mapping input values to output values based on an n-point 2D curve.

In the “old days”, we used a table with 65535 16 bit entries, that simply gave the output value, much as the example above. The table was 128KB, so again, we had a lot of L1 cache misses.

When we re-wrote colour correction, everything internally was converted to float, which made the table 256KB, and even slower. Furthermore it also made sure that we lost a lot of the float precision, by having to fit the float values into 65535 slots.

So we got two problems:

- Bad precision

- Table too large for L1 cache

Using lookup tables for float data

Since most of these lookups are fairly linear, without too sudden peaks, usually we are fairly in the clear if we reduce the precision of the table, and do linear interpolation between them instead. This avoids posterizing the image data, because all float values will have a unique output. Here is an example, where we use a curve correction with only 256 input/output values, and interpolate them instead.

float curve_samples[257];

// Make sure we have an extra entry, so we avoid an

// "if (lookup > 255)" later.

curve_samples[256] = curve_samples[255];

for (all pixels...) {

// Look up and interpolate

float lookup = CLAMP(input * 256.0f, 0.0f, 255.9999f);

float v0 = curve_samples[(int)lookup];

float v1 = curve_samples[(int)lookup + 1];

lookup -= floorf(lookup);

output = v0 * (1.0f - lookup) + v1 * lookup;

}So now the table is only 1KB in size and fits very nicely within the Level 1 cache. This approach solves both our problems, but at the expense of some calculations. Whether 256 entries are enough is up for you to decide, but the principle applies for all sizes. The “floor” function can be quite slow on some compilers/CPUs, but it is here to demonstrate the algorithm. We will touch this later.

SSE2 Implementation

So now we are ready for putting a version together in SSE2. I mentioned that we would look separately at the “floor” function, so lets start by looking at that.

SSE2 Floor function

In SSE2 there isn’t any instruction that can do float rounding. It has been added in SSE 4.1, but that is only available on a minority of computers.

The most obvious way is to do a float -> int -> float conversion. Unfortunately, if you look at the instruction timing of the “cvtps2dq” and “cvtdq2ps” it has a latency of up to 50 cycles on Pentium Netburst (P4) and AMD K8. That means you risk having to wait 100 cycles before the result is available. Intel Core 1 and newer have much better timing for these instructions, so in most cases it is not a huge problem.

But digging around on the internet, I found this rather nice trick, that uses the float point point trick, that will overflow 32 bit float to the point where all information below the single digits is rounded off.

static const float _two_to_23_ps[4] __attribute__ ((aligned (16))) = { 0x1.0p23f, 0x1.0p23f, 0x1.0p23f, 0x1.0p23f };

/* Floor for positive numbers */

static inline __m128 _mm_floor_positive_ps( __m128 v )

{

__m128 two_to_23_ps = _mm_load_ps(_two_to_23_ps);

return _mm_sub_ps( _mm_add_ps( v, two_to_23_ps ), two_to_23_ps );

}What is does is pretty simple, it adds a “very large number” and subtracts it right away. In C terms it does this:

float output = (input+0x1.0p23f)-0x1.0p23f;On Core 2, it has the same timing as a conversion, but it is considerably faster on older systems, assuming you can hide the cost of the load in other code. Do note, you can NOT use this trick in C, since x87 float float operation have greater internal precision. I have only tested this with positive numbers, I think it will have to be modified to work with negative ones too (masking out the sign and re-applying it).

Another this you will have to do, is to adjust the rounding mode. This is pretty simple using intrinsics though:

int _mm_rounding = _MM_GET_ROUNDING_MODE();

_MM_SET_ROUNDING_MODE(_MM_ROUND_TOWARD_ZERO);

(... all processing code goes here...)

_MM_SET_ROUNDING_MODE(_mm_rounding);Implementing the curve adjustment

So lets move on to the actual implementation of the algorithm described in the first part of this article. The first thing we need is a couple of constants:

static float _twofiftysix_ps[4] __attribute__ ((aligned (16))) = {255.9999f,255.9999f,255.9999f,255.9999f};

static float _ones_ps[4] __attribute__ ((aligned (16))) = {1.0f, 1.0f, 1.0f, 1.0f};Here is the algorithm. Input and output is “__m128 v”, which contains 4 values to look up and are values between 0 and 1.

/* Convert v to lookup values and interpolate */

int xfer[4] __attribute__ ((aligned (16)));

__m128 v_mul = _mm_mul_ps(v, _mm_load_ps(_twofiftysix_ps));

__m128i lookup = _mm_cvtps_epi32(v_mul);

_mm_store_si128((__m128i*)&xfer[0], lookup);

/* Calculate fractions */

__m128 frac = _mm_sub_ps(v_mul, _mm_floor_positive_ps(v_mul));

__m128 inv_frac = _mm_sub_ps(_mm_load_ps(_ones_ps), frac);

/* Load two adjacent curve values and interpolate between them */

__m128 p0p1 = _mm_castsi128_ps(_mm_loadl_epi64((__m128i*)&curve[xfer[0]]));

__m128 p2p3 = _mm_castsi128_ps(_mm_loadl_epi64((__m128i*)&curve[xfer[2]]));

p0p1 = _mm_loadh_pi(p0p1, (__m64*)&curve[xfer[1]]);

p2p3 = _mm_loadh_pi(p2p3, (__m64*)&curve[xfer[3]]);

/* Pack all lower values in v0, high in v1 and interpolate */

__m128 v0 = _mm_shuffle_ps(p0p1, p2p3, _MM_SHUFFLE(2,0,2,0));

__m128 v1 = _mm_shuffle_ps(p0p1, p2p3, _MM_SHUFFLE(3,1,3,1));

v = _mm_add_ps(_mm_mul_ps(inv_frac, v0), _mm_mul_ps(frac, v1));There are several interesting points in this piece of code, but lets start by looking at the overall picture:

- For 4 pixels we have 4 lookups into the curve table, because we load the two adjacent values with a single 64 bit load.

- All the math in the “Calculate fractions” is independent from much of the code above and below, so the cost of this calculation is basically hidden by the memory lookups on superscalar CPUs.

- “shuffle_ps” (shufps) isn’t the fastest operation in the world, but I haven’t found any good alternatives in SSE2.

But there are still a few questions that remain unanswered.

Why are you transferring lookup values through memory?

This might seem a bit strange, but unfortunately the alternatives are quite slow. The most obvious alternative is “pextrw”. This instruction have a latency of 2.5 cycles on new platforms and considerably more on Netburst/AMD K8.

All modern CPUs have a feature called “store forwardning”. There are a lot of restrictions on this, but what it basically means is that if you store an aligned value, and read aligned values, the CPU will bypass the cache and forward the value directly. So again, this method is considerably faster on older systems, but if you do a separate SSE 4.1 version you might want to use pextrw instead.

Aren’t unaligned loads of 64 bit value slow?

Yes – you are absolutely correct, and that brings us to the last optimization possibility. Right now there are two things that slow this algorithm down:

- Unaligned 64 bit loads (50% of all loads)

- Cache splits (1 in 16 loads)

The first issue is not optimal, but the second issue is pretty bad – the penalty is more than 20 cycles on Intel CPUs. But luckily we can fix the issue by re-ordering our data.

The basic principle is that you duplicate each pair of values, so you create a table with the following layout:

new_curve[0] = curve[0]; /* first pair */

new_curve[1] = curve[1];

new_curve[2] = curve[1]; /* second pair */

new_curve[3] = curve[2];

new_curve[4] = curve[2]; /* third pair */

....

Obviously this doubles the size of the lookup table, but for this example we are still well within level 1 cache size. The “new_curve” array must be 8 byte aligned to get the desired effect. So we modify the lookups to this:

/* Load two adjacent curve values and interpolate between them */

__m128 p0p1 = _mm_castsi128_ps(_mm_loadl_epi64((__m128i*)&new_curve[xfer[0]*2]));

__m128 p2p3 = _mm_castsi128_ps(_mm_loadl_epi64((__m128i*)&new_curve[xfer[2]*2]));

p0p1 = _mm_loadh_pi(p0p1, (__m64*)&new_curve[xfer[1]*2]);

p2p3 = _mm_loadh_pi(p2p3, (__m64*)&new_curve[xfer[3]*2]);This solves both our problems, since all our loads are aligned and we don’t read across cache line boundaries.

“Preheating” lookup tables

A final issue when dealing with lookup tables is to preheat – or pre-load the values before you start using them. If the table values are not in cache, you will get a massive penalty when you hit a value that hasn’t been used before.

Lookup tables are not accessed in a linear fashion, so the hardware prefetcher will not be able to predict which values you will be needing next. Therefore you are quite certain that the value will be read from memory, which gives a penalty of more than 100 cycles. To avoid this you simply read all the values in the table in a linear fashion with a simple loop:

float unused = 0;

const int cache_line_bytes = 64;

/* Preloads cache with lookup data */

for (int i = 0; i < 514; i+=(cache_line_bytes/sizeof(float)))

unused += new_curve[i];

Be sure your compiler doesn’t optimize out your load, because it thinks you wont be using the value. The cache line size is 64 bytes on most modern machines, and as far as I recall no SSE2 machines have a cacheline size less than 64 bytes.

This optimization is also one that can be used in your C-code, but you might want to use a cacheline size of 32 bytes to accommodate older machines like Pentium 3 and AMD K7.

Conclusion & More Information

So by now we managed to turn a relatively simple operation into something quite complex, but it should also be faster and yield better quality, and fit within an SSE2 render chain. Here are some things to get more information:

- Intel® 64 and IA-32 Architectures Optimization Reference Manual – the ultimate guide for optimizing. Years of learning here.

- Software Optimization Guide for AMD AMD edition of the document above. These two mainly contain the same information.

- Diary Of An x264 Developer – has some very good optimization stories. Edit: Original site is down, so archive link used.

- Avery Lee’s blog on Virtualdub – also some very good performance tips and stories.